How to use Mistral AI on macOS with BoltAI

What is Mistral AI?

How to obtain a Mistral AI API key

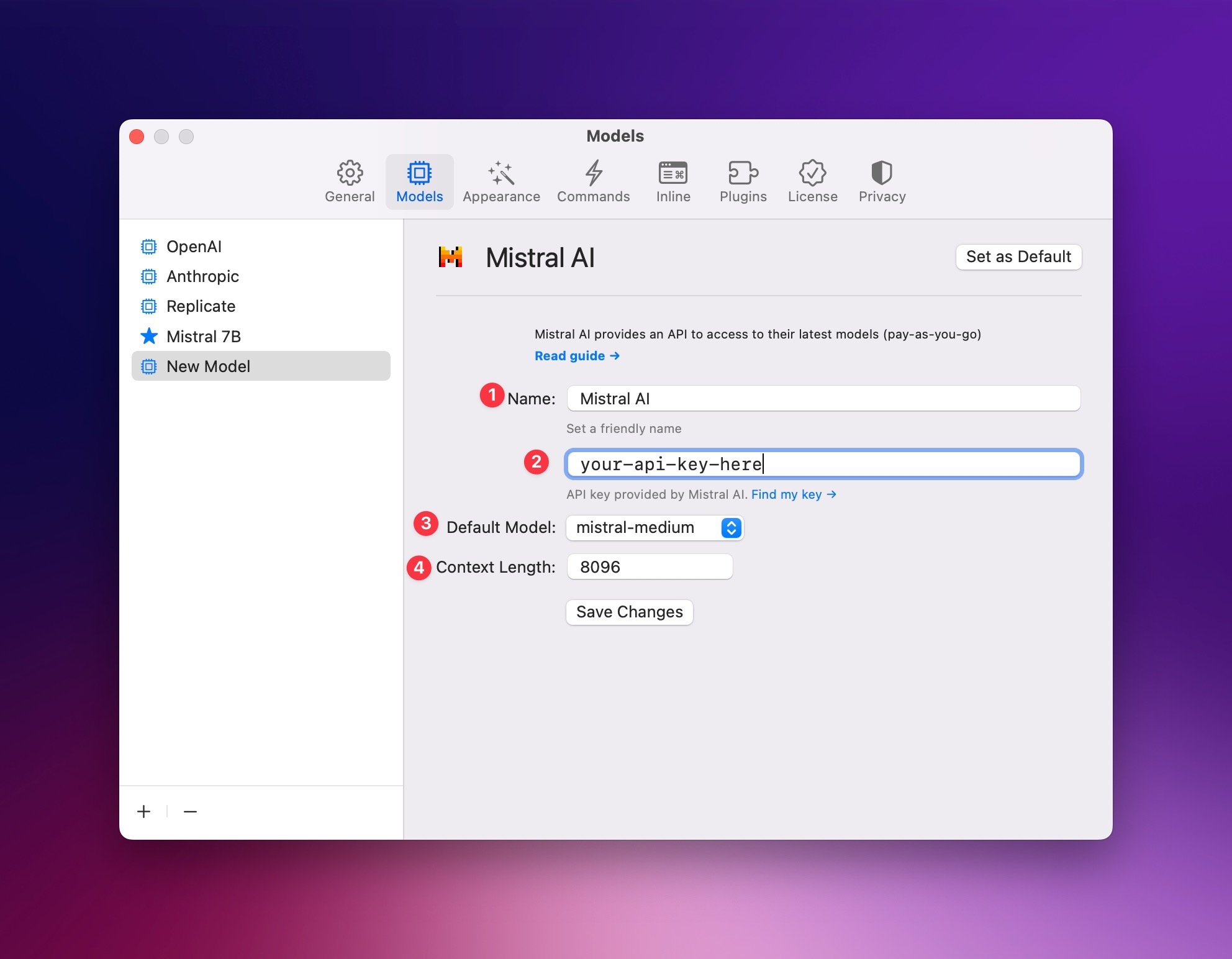

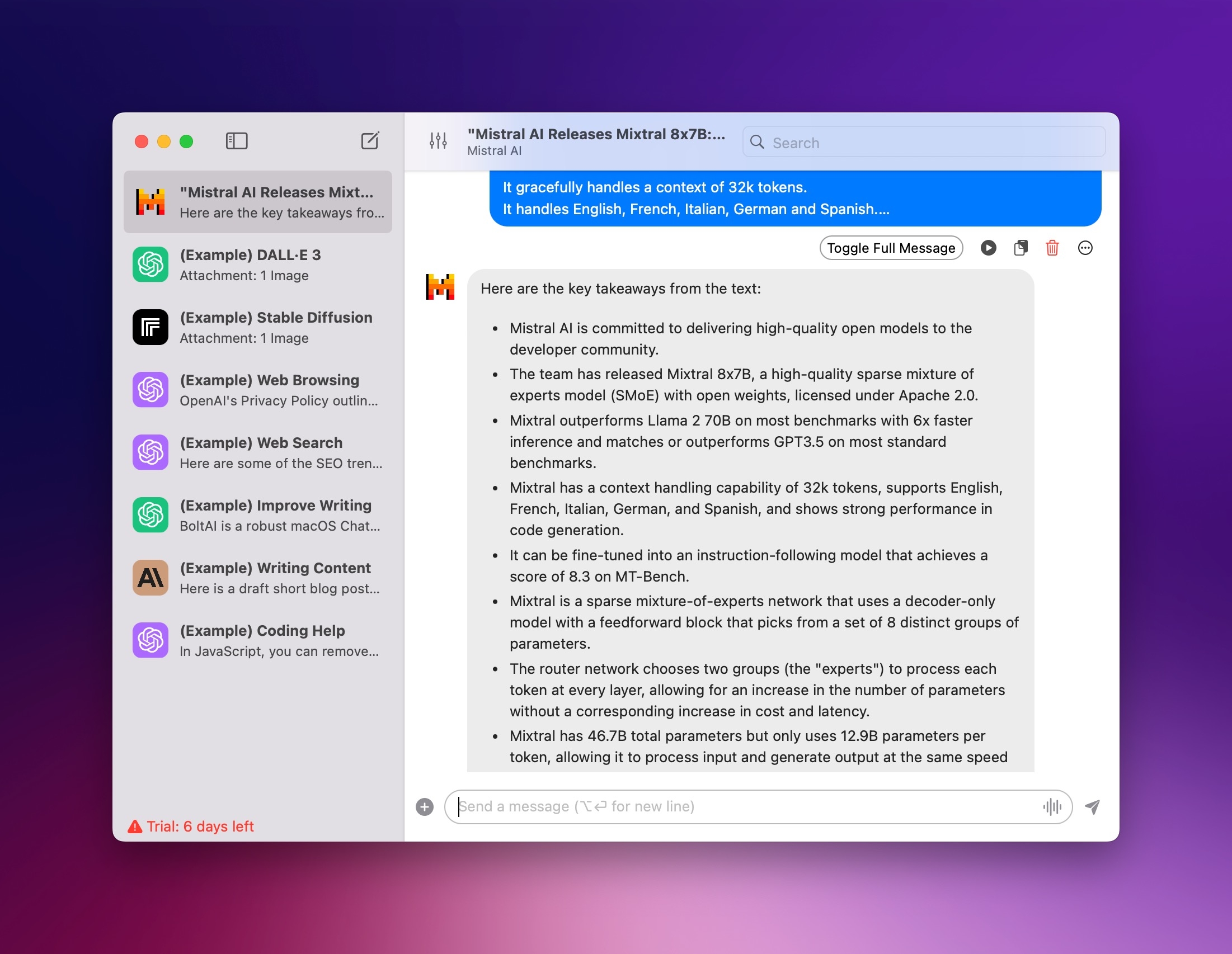
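Before you paste the new key into BoltAI, you may want to confirm that it works. The sketch below is a minimal example, not part of BoltAI. It assumes Mistral's public API lives at `https://api.mistral.ai/v1`, accepts the key as a `Bearer` token, and returns an OpenAI-style `{"data": [{"id": ...}]}` body from `GET /models`. The helper names and the `MISTRAL_API_KEY` environment variable are hypothetical and are used only for illustration.

```python
import json
import os
import urllib.request

# Assumed base URL of Mistral's public API.
MISTRAL_API_BASE = "https://api.mistral.ai/v1"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists the models visible to this key."""
    if not api_key:
        raise ValueError("API key is empty")
    return urllib.request.Request(
        f"{MISTRAL_API_BASE}/models",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed Bearer-token auth
            "Accept": "application/json",
        },
        method="GET",
    )


def list_model_ids(api_key: str, timeout: float = 10.0) -> list[str]:
    """Send the request and return model IDs; urllib raises HTTPError (e.g. 401) for a rejected key."""
    request = build_models_request(api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    # Assumption: the body has the OpenAI-style shape {"data": [{"id": ...}, ...]}.
    return [model["id"] for model in payload.get("data", [])]


if __name__ == "__main__":
    # MISTRAL_API_KEY is a hypothetical variable name chosen for this check.
    key = os.environ.get("MISTRAL_API_KEY")
    if key:
        for model_id in list_model_ids(key):
            print(model_id)
    else:
        print("Set MISTRAL_API_KEY to run this check.")
```

If the script prints a list of model IDs, the key is active. An HTTP 401 error usually means the key was mistyped or has been revoked.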

